CTAD: Adaptive Antibody Trial to Try Bayesian Statistics

Scientists who traveled to Monaco to learn about new Alzheimer's drugs and trials discovered that, solanezumab and bapineuzumab aside (see Part 2 of this series), the program of the 5th Clinical Trials in Alzheimer’s Disease (CTAD) conference was mostly stocked with methodological topics. This may not be surprising, as it reflects a field moving to implement a better paradigm for drug testing. And wedged in between talks on cognitive composites, standardization, and power calculations, the program did feature some news on the next wave of potential treatments. For example, one upcoming trial is venturing into the brave new world of Bayesian statistics (see below). Another will enrich by ApoE genotype (see Part 5 of this series).

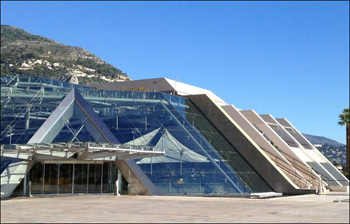

The Grimaldi Forum Convention Center, Monte Carlo. Image courtesy of Gabrielle Strobel

The news and blogosphere last week were abuzz with post-election chatter about how Bayesian probability allowed a statistics geek to call last week’s U.S. presidential contest—in all 50 states and down to the number of electoral votes—long before November 6 (e.g., Phys.org; Times Live). In the afterglow of this sudden popularity, Bayesian analysis is on the rise in the field of Alzheimer’s research as well. That’s because—stumped by standard designs again and again—AD researchers this past year started experimenting with Bayesian adaptive trials. The API, DIAN, and ADCS A4 trials are dipping their toes into the waters by incorporating adaptive elements. At CTAD, Andy Satlin of Eisai Inc. in Woodcliff Lake, New Jersey, described how his team is set to test a new antibody with a headfirst plunge into the depths of Bayesian probability—which statisticians have described as more than a little intimidating.

For a layperson’s description of how adaptive trials work, see this ARF related news story. Put facetiously, it’s a bit like the trialists’ version of governing by poll. Adaptive trials don’t execute a fixed design, i.e., stick to every detail no matter how the trial is going. Instead, they take the trial’s temperature periodically and adjust certain things. Bayesian statistics underpin such designs. Combining them with information on the disease’s natural history and progression, scientists build a model that forecasts a variety of potential drug responses and assigns a probability distribution to each envisioned scenario. As the trial unfolds and initial data come in, the model adjusts parameters such as how new patients are randomized.
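The updating step at the heart of such a model can be sketched in a few lines of Python. This is only a minimal illustration, not the model used in any of the trials mentioned here: it assumes a hypothetical binary "responder" outcome, a flat Beta(1, 1) prior, and invented patient counts, and the `BetaBelief` class is a name made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BetaBelief:
    """Beta(a, b) belief about a response rate (hypothetical binary outcome)."""
    a: float = 1.0  # prior pseudo-count of responders (flat prior, an assumption)
    b: float = 1.0  # prior pseudo-count of non-responders

    def update(self, responders: int, non_responders: int) -> "BetaBelief":
        # Conjugate Bayesian update: add the observed counts to the prior counts.
        return BetaBelief(self.a + responders, self.b + non_responders)

    @property
    def mean(self) -> float:
        # Posterior mean of the response rate.
        return self.a / (self.a + self.b)


# Invented interim data, for illustration only.
prior = BetaBelief()
after_first_look = prior.update(responders=12, non_responders=28)
after_second_look = after_first_look.update(responders=9, non_responders=11)

for label, belief in [("prior", prior),
                      ("first look", after_first_look),
                      ("second look", after_second_look)]:
    print(f"{label}: estimated response rate {belief.mean:.3f}")
```

Each interim look starts from the belief left by the previous one, which is why the estimate can be refreshed repeatedly as patients enroll instead of waiting for a single final analysis.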

Adaptive trials came onto the scene as Eisai scientists were planning the Phase 2 evaluation of BAN2401. This is an antibody against Aβ protofibrils that Eisai licensed from BioArctic Neuroscience, the Swedish biotech company co-founded by the discoverer of the Arctic APP mutation, Lars Lannfelt of Uppsala University (see ARF related news story). To advance this new type of therapy, Eisai scientists need to answer questions such as which of many possible doses to choose, how often to dose, and what effect size they could reasonably expect—in other words, how many patients they would need. “Answering these with a fixed Phase 2 design could result in a lengthy, expensive failure in Phase 3,” Satlin told the audience. Adaptive designs, in theory, can cut down the time to decide whether the drug is likely effective and settle on doses for Phase 3.

Working with Don Berry, of the University of Texas MD Anderson Cancer Center in Houston and a leader in adaptive trial design, the Eisai scientists spent months building a statistical model for how this could work. They will start the trial with a "burn in," meaning the first 200 enrollees get randomized in a fixed distribution either to placebo or to one of five doses of antibody. Then the "polling" begins; that is, every time 50 additional patients are enrolled, the researchers will conduct an interim analysis. If the trial continues to full enrollment of 800 patients, then the scientists will conduct quarterly analyses.

These interim analyses serve three purposes, all while the scientists at Eisai remain blinded to the interim results. First, while the trial enrolls, they will guide the allocation of disproportionately more subsequent enrollees to those doses that seem to generate the best response, and relatively fewer to inactive or toxic doses. Second, once as few as 200 patients are randomized, an interim analysis can indicate that the drug is not working, and the trialists can then end the trial. Third, once 350 people are randomized, the sponsor can declare success and stop the trial. According to Satlin, this trial model allows scientists to find out whether the drug likely works, and which doses to take into Phase 3, with an estimated 200 fewer patients and up to a year earlier than if the trial followed a fixed design. “The goal is to find the most effective dose with fewer subjects by adapting the randomization,” he said.
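The three jobs of an interim analysis can be sketched in Python as follows. The enrollment numbers (200, 50, 350, 800) come from Satlin's description; everything else is an assumption made for illustration, including the binary responder outcome, the flat Beta(1, 1) priors, the 0.05 and 0.95 stopping thresholds, the first look falling at the end of burn-in, and all function and variable names. The actual Eisai model is not described in enough detail here to reproduce, so this should be read as a toy version of the general technique.

```python
import random

BURN_IN, LOOK_EVERY, MAX_N = 200, 50, 800      # from the talk
FUTILITY_FROM, SUCCESS_FROM = 200, 350         # earliest stopping points, from the talk
FUTILITY_BELOW, SUCCESS_ABOVE = 0.05, 0.95     # hypothetical thresholds

ARMS = ["placebo", "dose1", "dose2", "dose3", "dose4", "dose5"]
DOSES = ARMS[1:]


def interim_looks():
    """Enrollment counts at which a look happens (assumes the first is at burn-in)."""
    return list(range(BURN_IN, MAX_N + 1, LOOK_EVERY))


def interim_analysis(counts, n_randomized, draws=4000, seed=0):
    """counts maps each arm to (responders, non_responders)."""
    rng = random.Random(seed)
    best_hits = {d: 0 for d in DOSES}   # how often each dose looks best
    beat_hits = {d: 0 for d in DOSES}   # how often each dose beats placebo
    for _ in range(draws):
        # Draw one plausible response rate per arm from its Beta posterior.
        rate = {arm: rng.betavariate(1 + r, 1 + n) for arm, (r, n) in counts.items()}
        best_hits[max(DOSES, key=rate.get)] += 1
        for d in DOSES:
            if rate[d] > rate["placebo"]:
                beat_hits[d] += 1
    # Job 1: weight future enrollees toward doses more likely to be best.
    allocation = {d: best_hits[d] / draws for d in DOSES}
    lead = max(DOSES, key=allocation.get)
    p_beats = beat_hits[lead] / draws
    # Jobs 2 and 3: stop early for futility or for success, if permitted yet.
    if n_randomized >= SUCCESS_FROM and p_beats > SUCCESS_ABOVE:
        decision = "stop_for_success"
    elif n_randomized >= FUTILITY_FROM and p_beats < FUTILITY_BELOW:
        decision = "stop_for_futility"
    else:
        decision = "continue"
    return decision, lead, allocation


# Invented counts for 350 randomized patients, for illustration only.
example_counts = {"placebo": (10, 50), "dose1": (10, 48), "dose2": (13, 45),
                  "dose3": (17, 41), "dose4": (29, 29), "dose5": (27, 31)}
decision, lead, allocation = interim_analysis(example_counts, n_randomized=350)
print(interim_looks())
print(decision, lead, allocation)
```

The sketch leaves out the placebo share of the randomization and the blinding of the sponsor, both of which a real design has to handle.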

Regulators have encouraged the field to use adaptive designs since 2004. That is when the Food and Drug Administration’s Janet Woodcock and others issued the Critical Path Initiative in an effort to bridge the translational gap between rapid scientific advances and stagnating drug approval. The cancer field heeded the call before Alzheimer’s, but this is now changing.

Besides using a new design, the BAN2401 Phase 2 program will use a different outcome measure. At CTAD, Veronika Logovinsky of Eisai described a new composite scale. It is stitched together from individual tests of established scales that have been the mainstay of AD drug trials but are increasingly seen as inadequate for upcoming trials in earlier stages of AD. Working with others at Eisai and with Suzanne Hendrix, an independent biostatistician in Salt Lake City, Utah, Logovinsky pressure-tested how each component of the ADAS-cog, the MMSE, and the CDR performed in previous MCI trials done by Eisai, by the Alzheimer’s Disease Cooperative Study, and in ADNI. They picked the tests that were least heterogeneous and most sensitive to disease progression and to drug effects at the predementia stage. The new composite uses four items from the ADAS-cog, two from the MMSE, and all six from the CDR, Logovinsky said. Eisai hopes that regulators will accept this combination of some cognitive and some clinical/functional tests as a single outcome measure.

Beyond this study, similar initiatives are afoot across academia and industry. Up and down the field, neuropsychologists and biostatisticians, partly inspired by Hendrix’s work, are probing placebo samples from prior drug trials and natural history datasets for test combinations that detect the most change at the MCI stage with the least noise (e.g., ARF related news story).—Gabrielle Strobel.

This is Part 4 of a seven-part series. See also Part 1, Part 2, Part 3, Part 5, Part 6, and Part 7. Read a PDF of the entire series.

References

News Citations

- CTAD: New Data on Sola, Bapi, Spark Theragnostics Debate

- CTAD: ApoE Carriers Sought for Trial of NSAID Derivative

- Can Adaptive Trials Ride to the Rescue?

- Barcelona: Antibody to Sweep Up Aβ Protofibrils in Human Brain

- Detecting Familial AD Ever Earlier: Subtle Memory Signs 15 Years Before

- CTAD: Turning the Ship Around Toward Early Trials

- CTAD: Regulatory Science Gains Prominence in AD Research

- CTAD: EEG Gains Luster as More Trials Incorporate Biomarkers

- CTAD: AD Treatment Might Not Lower Healthcare Costs
